The Agentic Maturity Curve

February 10, 2026

Dan Shapiro recently proposed a leveled model for AI-assisted programming, running from Level 0 to Level 5, that’s been rattling around in my head since I read it (hat tip to Simon Willison for amplifying it). It’s useful partly because it’s a good model, and partly because I think it applies to far more than just writing code.

If you’ve spent any time in analytics or data strategy, you’ve seen maturity models before. Gartner loves them. Every consulting firm has its own version. They usually describe a journey from chaotic, ad-hoc practices toward something systematic and optimized. Shapiro’s model does the same thing for AI collaboration, but with a twist: the endpoint isn’t just “optimized.” It’s autonomous.

Here’s the progression:

Level 0: Spicy Autocomplete. The original GitHub Copilot. Copying and pasting snippets from ChatGPT. The AI suggests, you accept or reject. You’re still doing the work—the AI is just a faster search engine with better guesses.

Level 1: Task Automation. AI handles discrete tasks: “Write a unit test” or “Add a docstring.” Speedups exist, but your role and workflow remain largely unchanged.

Level 2: Pairing. You get into a flow state. You’re more productive than you’ve ever been. Shapiro notes this is where most AI-native developers live today—and where many mistakenly believe they’ve hit the ceiling.

Level 3: Code Review Manager. Your life is diffs. AI agents generate solutions; you review constantly. You’re still hands-on, but the balance has flipped. The developer becomes a human-in-the-loop manager.

Level 4: Specification to Shipping. You’re not a developer anymore. You’re a PM writing specs and designing agent workflows. You step away while systems build complete features, checking test results when you return. Shapiro places himself here.

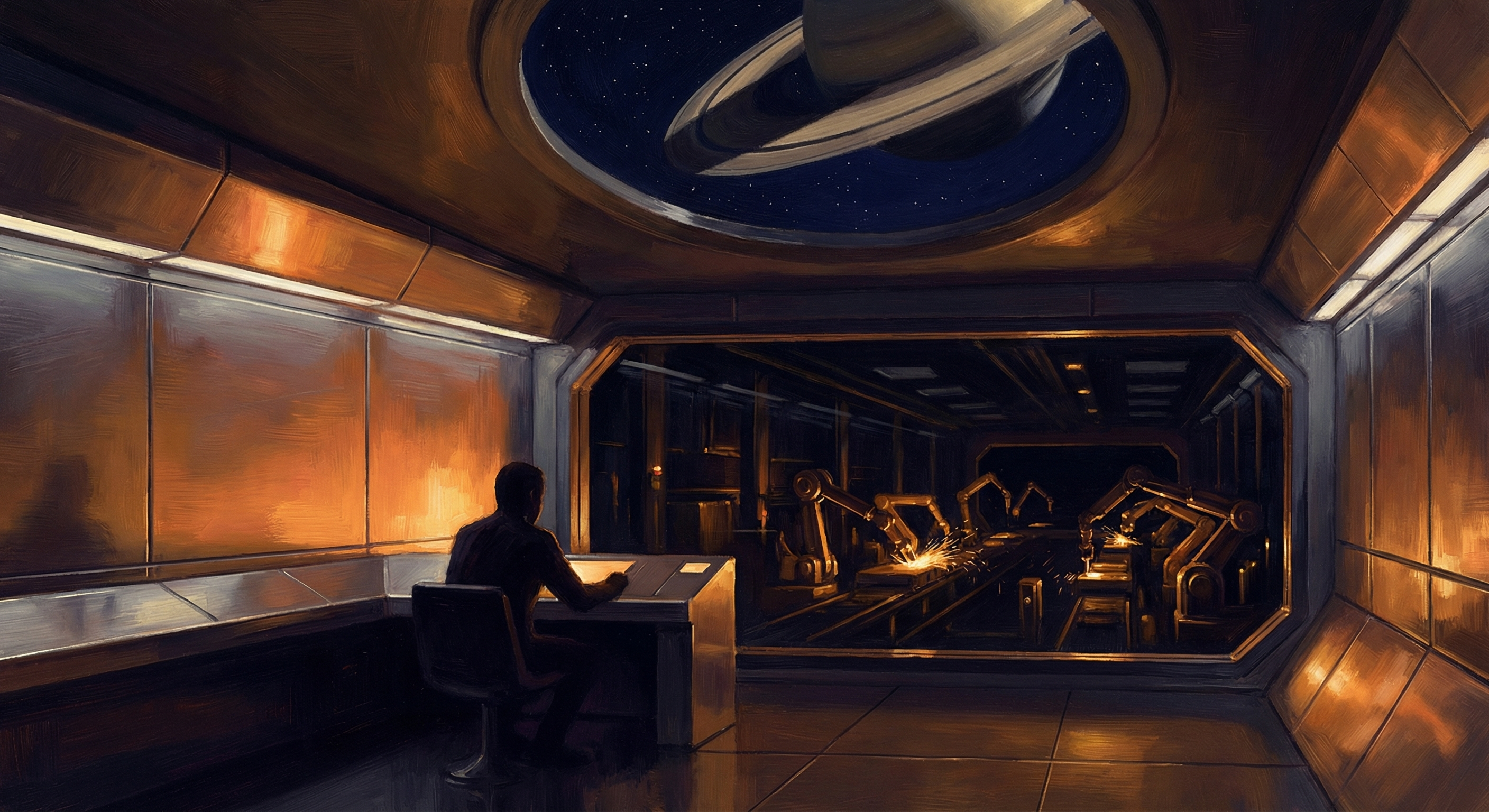

Level 5: The Dark Factory. Named after Fanuc’s robot-staffed manufacturing facility where the lights stay off because robots don’t need to see. At this level, the system takes specs and produces software. Humans design the process and handle exceptions, but they’re not in the loop for normal operations. Think this is sci-fi? There are companies already trying it.

Beyond Code

What strikes me about this model is how little it depends on the output being software.

A marketing team at Level 2 is pair-prompting on campaign copy. At Level 3, they’re reviewing AI-drafted campaigns and approving the good ones. At Level 4, they’re defining brand guidelines and letting agents produce variations. Level 5 is programmatic creative at scale—personalized ads generated and deployed without human review.

The model generalizes because the underlying dynamic is the same: as trust in the system increases, humans move from production to oversight to design.

The Post-Data World

For data and analytics, I think this maturity curve points toward something more fundamental: a world where analytics disappears into the workflow.

Data exists to tell us something about the world we can’t directly observe. “Where should I focus my efforts?” might be answered by seeing that sales are down in the west. “What should I do about it?” might be answered by customer satisfaction trends or competitor pricing. But the goal was always to sell more and stay profitable, not to find the best sales report.

I wrote about this back in 2024: the job to be done is never the dashboard or chart itself. The dashboard is a means to an end, and often a clunky one.

At higher levels of agentic maturity, you stop going to your data. The data comes to you. An agent helping you plan your quarter already knows sales are down in the west—you don’t need to check a dashboard first. An agent drafting your pricing strategy has already incorporated competitor intelligence. Analytics becomes infrastructure, not a destination. The insights are woven into the tools you use, surfaced when relevant, invisible when not.

This is the promise of Levels 4 and 5 for knowledge work: not just automating the production of reports, but eliminating the need to consume them as a separate activity.

Where Are We?

Shapiro puts most serious AI users today somewhere between Level 2 and Level 3. That matches my experience. The people I know who’ve really integrated AI into their work have largely stopped writing initial code drafts. They’re reviewing, refining, redirecting. They’re managing output, not producing it. In fact, it’s our senior engineering managers who seem to be sprinting ahead the fastest.

Level 5 remains rare. The teams operating there are typically small—under five people—with extensive experience in high-reliability systems. They’ve invested heavily in testing, tooling, and validation. The “dark factory” isn’t about removing humans. It’s about moving humans to where they add the most value.

The Maturity Question

Maturity models are useful because they give you a vocabulary for talking about where you are and where you’re going. They’re dangerous because they imply a linear progression that may not exist. Not every team needs to reach Level 5. For some work, Level 2 is exactly right—the collaboration itself is valuable, and automating it away would lose something.

The question isn’t “how do I get to Level 5?” It’s “what level is appropriate for this work, and am I there?” At the same time, treating Level 5 as a possible end goal can show you what to start standardizing now (processes, knowledge, skills) on your way to a mostly autonomous factory.

In practical terms: if you’re reviewing every line of AI output but the stakes are low and the volume is high, maybe you’re overinvesting in review. If you’re pushing toward automation but your testing infrastructure can’t catch regressions, maybe you’re moving too fast. If you’re still going to a dashboard every morning to understand your business, maybe there’s a more integrated way.

The Agency in Agentic

The people moving up this curve aren’t waiting for permission. They’re not sitting in Level 1 hoping someone will tell them it’s safe to try Level 2. They’re experimenting, failing, adjusting, and building confidence through practice.

The dark factory sounds like science fiction. Some days it feels closer than others. But getting there—if that’s even where you want to go—requires the same thing every maturity model requires: deliberate, iterative improvement driven by people who choose to push the boundaries.

As in many areas of life, we get to decide how much to trust. That decision isn’t automated. It’s yours, and it should be intentional.

Where do you sit on the agentic maturity curve? I’d love to hear about it—reach out on LinkedIn or Bluesky.